Research

(With a little effort, this page might work itself into reasonable shape.

Due to perpetual lack of time, I am always behind in updating it.

The information below, though, should suffice to whet your appetite.

If you want more information on any of these projects, please drop me a note.)

The overarching research umbrella of the

Parallel Architecture Group @ Northwestern (PARAG@N)

is energy-efficient computing.

At the macro scale, computers consume inordinate amounts of

energy, negatively impacting the economics and environmental footprint of computing.

At the micro scale, power constraints prevent us from riding and extending Moore's Law. We

attack both problems by identifying sources of energy inefficiencies and

working across the hardware and software stacks to address them.

Thus, our work extends from novel devices all the way to application software

through circuit and hardware designs, compilers and runtimes, OS optimizations, and programming languages.

Our path has taken us from classical conventional computing, to nano-photonics in computer architectures,

and more recently to Quantum Systems. The sections below attempt to elaborate more on our research directions.

We are also always in need for brilliant, passionate Ph.D. students to work with us.

If you find any of the projects below interesting, consider applying to our program.

A (quite old by now) overview of our research at PARAG@N was presented at an

invited talk at IBM T.J. Watson Research Center and Google

in March 2012. Many exciting new developments have happened since then, but the talk is a good starting point.

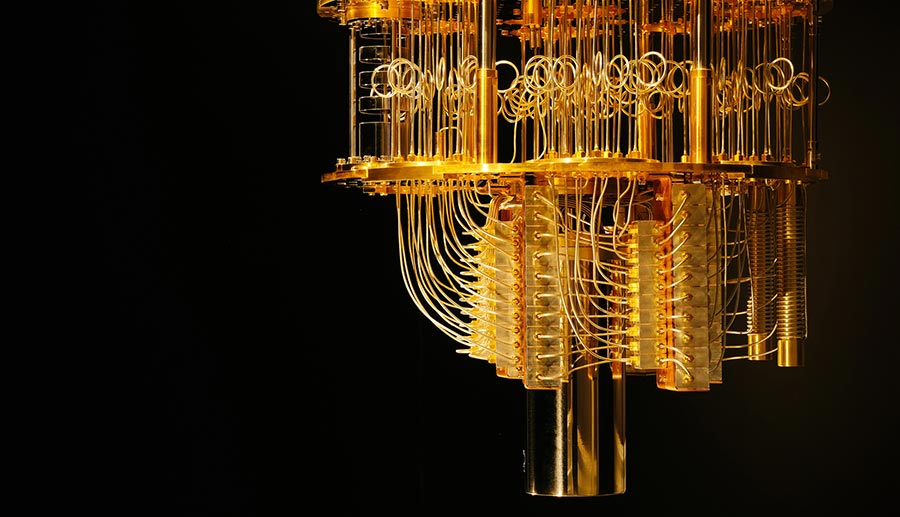

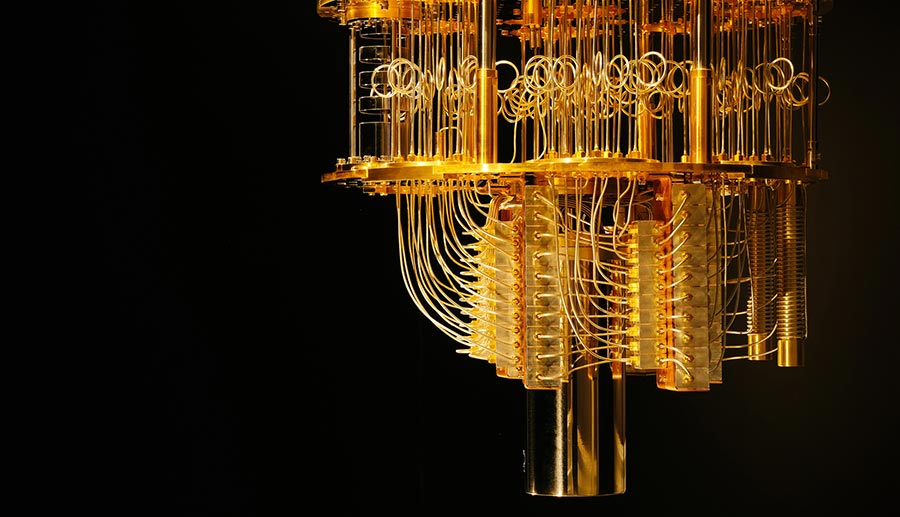

The last few stages of an IBM Q dilution refrigerator, used for cooling quantm chips to low temperatures of a few milli-Kelvin.

The last few stages of an IBM Q dilution refrigerator, used for cooling quantm chips to low temperatures of a few milli-Kelvin.

QSys: Innovating the Quantum Systems Layer

There has been great progress in quantum computing hardware, and the number of qubits per device is growing exponentially.

However, qubits are still very fragile. Transmon qubits decohere within a few microseconds, gates are plagued

by high errors, error correcting codes require orders of magnitude more qubits than available today, and many useful

quantum algorithms require even more. Algorithms and systems software can work together with the hardware to alleviate

many of the problems affecting current noisy intermediate-scale quantum hardware (NISQ), and can achieve orders-of-magnitude

more efficient quantum computation. The QSys project seeks to innovate

at the intersection of physical machines, systems software

and architecture, aiming to make quantum computing practical decades before hardware alone could achieve this goal.

As a starting point, we collaborated with researchers at Princeton University and the University of Chicago in the design of

SupermarQ,

a scalable, hardware-agnostic quantum benchmark suite which uses application-level metrics to measure performance.

The introduction of SupermarQ was motivated by the scarcity of techniques to reliably measure and compare the performance

of quantum computations running on today's quantum computer systems due to the high variety of quantum architectures and devices.

You can visit the SupermarQ webpage here

and get a brief overview of the suite in Super.Tech's SupermarQ Fundamentals white paper.

SupermarQ has already gathered significant media attention, and has been covered by

BCG,

The Quantum Insider,

HPC wire,

AWS Blog,

Quantum Computing Report,

The Quantum Observer,

The DeepTech Insider,

NewsBreak,

CB Insights' profile of IonQ,

Ex Bulletin,

Chicago Inno,

Tech Transfer Central,

Flipboard,

ivoox,

The Center for Entrepreneurship and Innovation at the University of Chicago,

HESHMore, and

the EPiQC center,

and has been featured in The Post-Quantum World podcast by protiviti,

also available in Apple Podcasts,

audible,

Spreaker and

Podcast Addict.

The paper appears in the 28th IEEE International Symposium on High-Performance Computer Architecture (HPCA-28),

where it received the Best Paper Award. Subsequently, SupermarQ received a 2023 IEEE MICRO Top Picks Honorable Mention.

QSys is a new project, and we are

looking for Ph.D. students that are passionate about contributing to

this nascent field. Students with combined physics and computer science or engineering backgrounds are especially encouraged to apply.

Constellation: Software & Hardware for Scalable Parallelism

Parallelism should be frictionless, allowing every developer to start with the assumption of parallelism

instead of being forced to take it up once performance demands it. Considerable progress has been made

in achieving this vision on the language and training front; it has been demonstrated that sophomores can

learn basic data structures and algorithms in a "parallel first" model enabled by a high-level parallel

language. However, achieving both high productivity and high performance on current and future

heterogeneous systems requires innovation throughout the hardware/software stack. This project brings two

distinct perspectives to this problem: the "theory down" approach, focusing on high-level parallel

languages and the theory and practice of achieving provable performance bounds within them; and the

"architecture up" approach, focusing on rethinking abstractions at the architectural, operating system,

runtime, and compiler levels to optimize raw performance.

Constellation

started in Fall 2021, and we are looking for Ph.D. students in

computer systems (architecture, FPGA/VLSI, operating systems, compilers) to help us turn our vision into reality.

This work is supported by NSF awards

SPX-2028851 and

SPX-2119069.

The Andromeda Galaxy (M31), the largest member of the Local Group.

The Andromeda Galaxy (M31), the largest member of the Local Group.

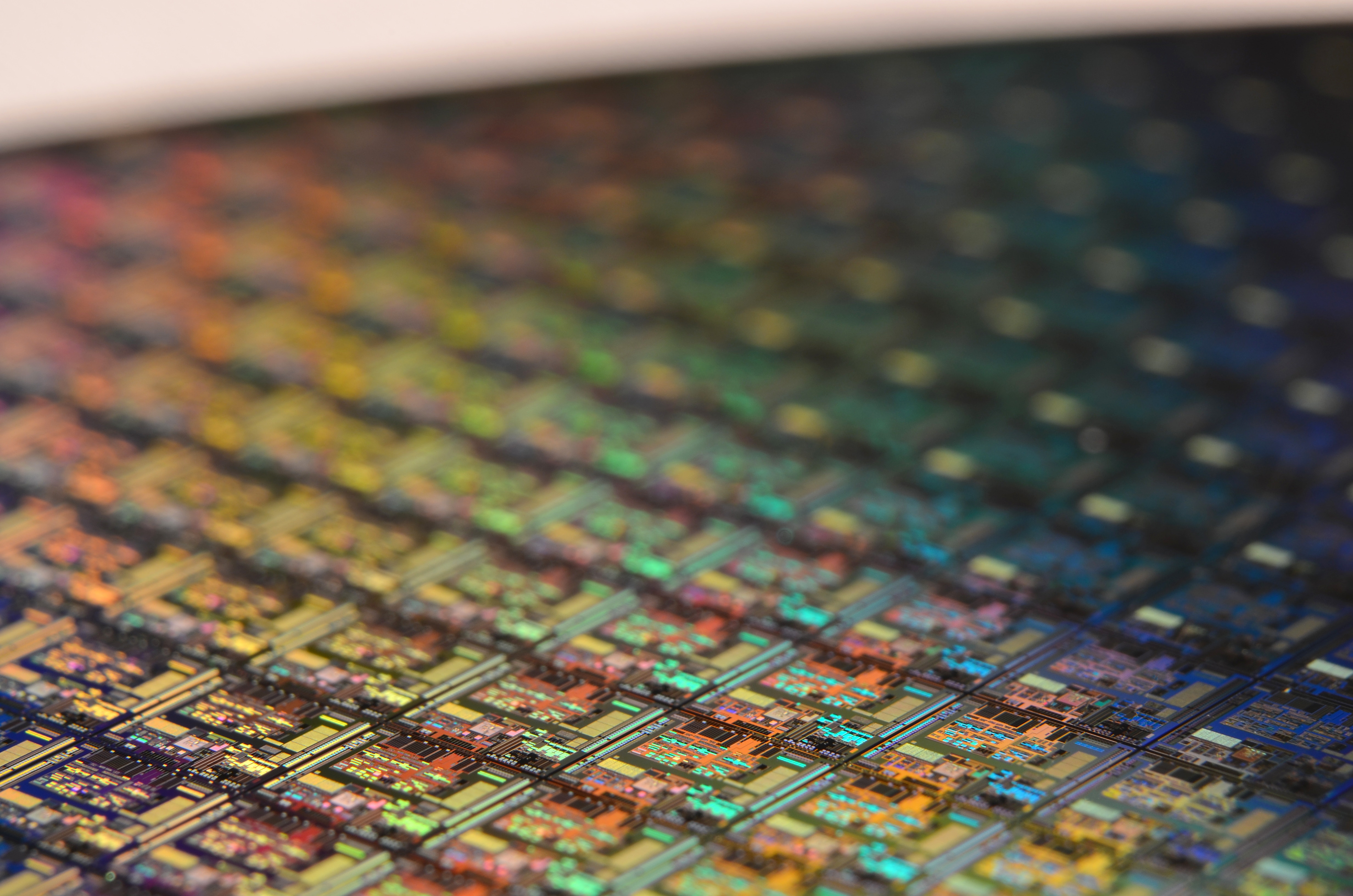

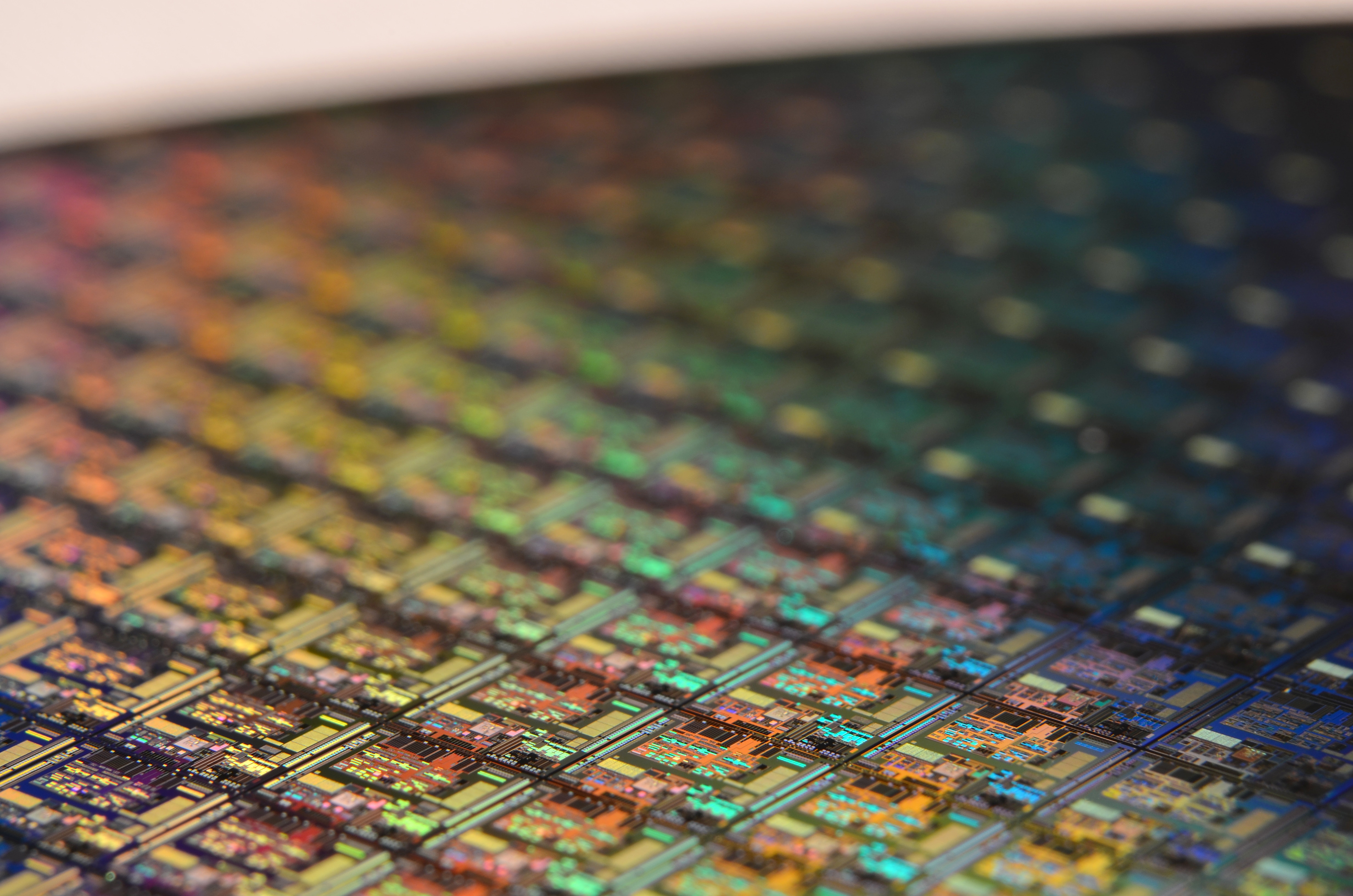

Galaxy: Computer Architecture Meets Silicon Photonics

This project combines advances in parallel computer architecture and silicon photonics

to develop architectures that break past the power, bandwidth and utilization walls (dark

silicon) that plague modern processors. The

Galaxy

architecture of optically-connected disintegrated processors argues

that instead of building monolithic chips, we should split them into several

smaller chiplets and form a "virtual macro-chip" by connecting them with optical links.

The optics allow such high bandwidth communication that break the bandwidth wall entirely,

and such low latency that the virtual macro-chip behaves as a single tightly-coupled

chip. As each chiplet has its own power budget and the optical links eliminate the traditional

chip-to-chip communication overheads, the macro-chip behaves as an oversized multicore that

scales beyond single-chip area limits, while maintaining high yield and reasonable cost

(only faulty chiplets need replacement). Our preliminary results indicate that Galaxy scales

seamlessly to 4000 cores, making it possible to shrink an entire rack's worth of computational

power onto a single wafer.

Galaxy was first proposed in WINDS 2010,

long before the industry jumped onto chiplet-based designs, and the full design was presented at an

EPFL talk

in 2014 and published at

ICS-2014.

This project has advanced the state of the art in silicon photonic interconnects by designing

a family of laser power-gating NoCs

(EcoLaser,

LaC,

EcoLaser+),

co-designing the on-chip NoC with the architecture in

ProLaser,

escalating the laser power-gating to datacenter optical networks with

SLaC and

projecting

on the datacenter energy savings, and overcoming the thermal transfer problems of

3D-stacked electro-optical processor/photonics chips with

Parka.

Even more exciting, we designed Pho$,

a multicore optical cache hierarchy that replaces all private L1/L2 caches with a single, shared,

single-cycle-access optical L1 cache. Compared to conventional all-electronic cache hierarchies,

Pho$ achieves 1.41x application speedup (4x max) and 31% lower energy-delay product (90% max).

To the best of our knowledge, Pho$ is the first practical design of an optical cache that can reach

a useful capacity (several MBs). This work was nominated for a Best Paper Award at ISLPED 2021

(slides).

We are now expanding our design to include optical phase-change memories (non volatile) for last-level caching.

A full list of publications appears in the

NSF CCF-1453853

project web page on energy-efficient and energy-proportional silicon photonic manycore architectures,

which partially funded this work.

Oak Ridge Lab's OLCF-5, a.k.a. Frontier, is the world's fastest supercomputer as of June 2022.

It achieves 1.102 quintillion operations per second, or exaFLOPS. Measuring 62.86 gigaflops/watt,

it also topped the Green500 list for most efficient supercomputer.

It sports 606,208 Zen 3 AMD cores and an additional 8,335,360 GPU cores within 74 19-inch rack cabinets,

with liquid-cooled blade nodes linked in a dragonfly topology with at most three hops between any two nodes.

Cabling is either optical or copper, customized to minimize cable length, and running at a total of 145 km.

The original design envisioned 150–500 MW of power; herculean engineering efforts lowered this to a more practical 21 MW.

Oak Ridge Lab's OLCF-5, a.k.a. Frontier, is the world's fastest supercomputer as of June 2022.

It achieves 1.102 quintillion operations per second, or exaFLOPS. Measuring 62.86 gigaflops/watt,

it also topped the Green500 list for most efficient supercomputer.

It sports 606,208 Zen 3 AMD cores and an additional 8,335,360 GPU cores within 74 19-inch rack cabinets,

with liquid-cooled blade nodes linked in a dragonfly topology with at most three hops between any two nodes.

Cabling is either optical or copper, customized to minimize cable length, and running at a total of 145 km.

The original design envisioned 150–500 MW of power; herculean engineering efforts lowered this to a more practical 21 MW.

Accelerated Collective Communication for the Exascale Era

MPI collective communication is an omnipresent communication model for HPC systems,

making up a quarter of the execution time on production systems.

The performance of a collective operation depends strongly on the algorithm used to implement it.

Unfortunately, current MPI libraries use inaccurate heuristics to select these algorithms,

and existing proposals to accelerate collectives fail to explore the interplay between modern hardware features,

such as multi-port networks, and software features, such as message size. ML-based autotuners attempt to automate

the process, but currently spend an inordinate amount of time training, rendering them impractical.

In this project we rethink MPI collective communication. We identify hardware commonalities found on exascale

systems, develop analytical models to intuit algorithm performance, and generalize common communication kernels

for modern HPC hardware. Experiments on the world’s first exascale supercomputer (Frontier at ORNL) and a

pre-exascale system (Polaris at ANL) show that our generalized, system-agnostic algorithms outperform the baseline

open-source and proprietary vendor MPI implementations by a significant margin, in some cases more than 4.5x.

In addition we identify impracticalities in modern ML autotuners and incorporate jackknife variance-based point

selection and guided sampling in our prototype active learning system. Experiments on a leadership-class supercomputer

(Theta at ANL) show significant reduction (more than 7x) in the time required to collect training data compared with

the best existing machine learning approach, making our autotuner the first practical ML collective autotuner that

can be deployed on large-scale production supercomputers.

While we continue unvestigating optimizations for near-optimal selection and tuning of MPI collectives,

we also investigate the prospects of functional memory-managed parallel languages for HPC and the automatic

management of distributed memory within such languages. This work is funded by a subcontract from Argonne National Laboratory.

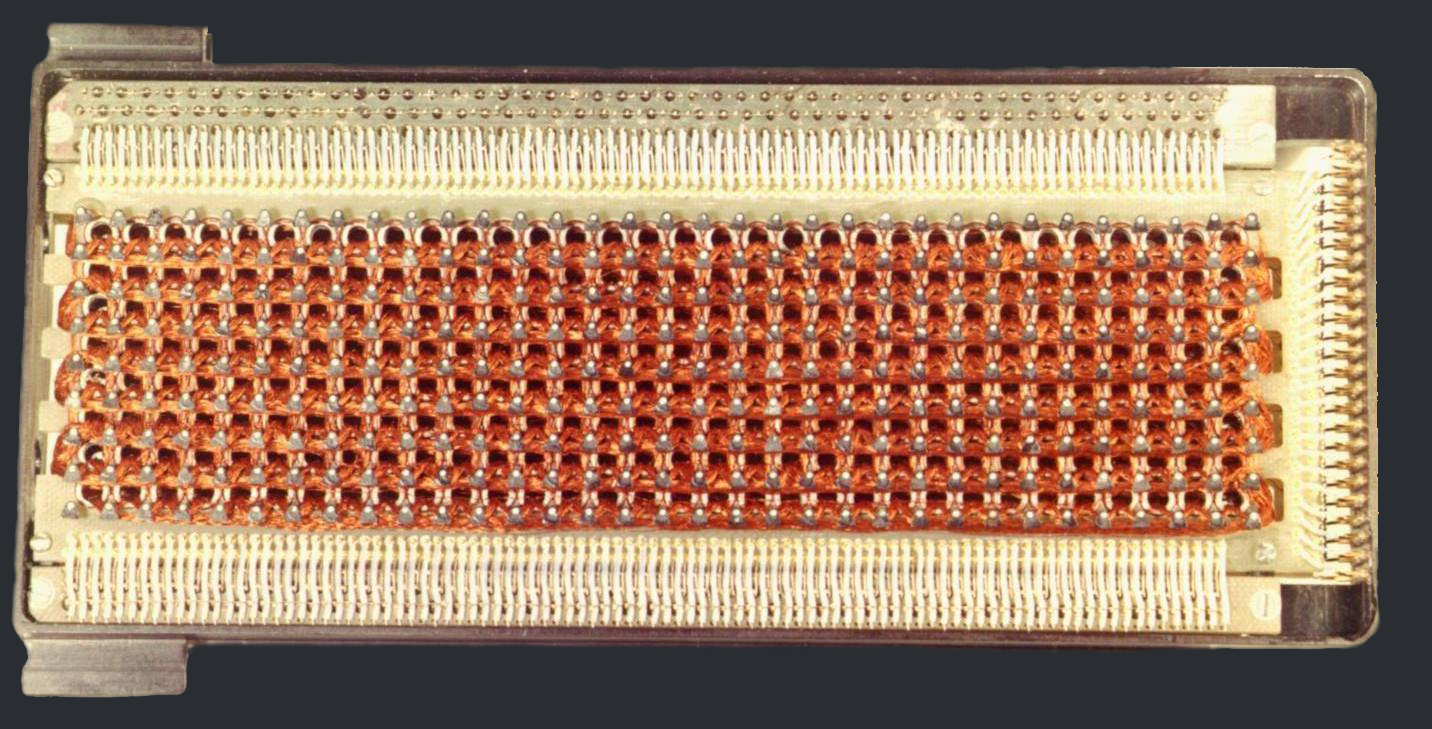

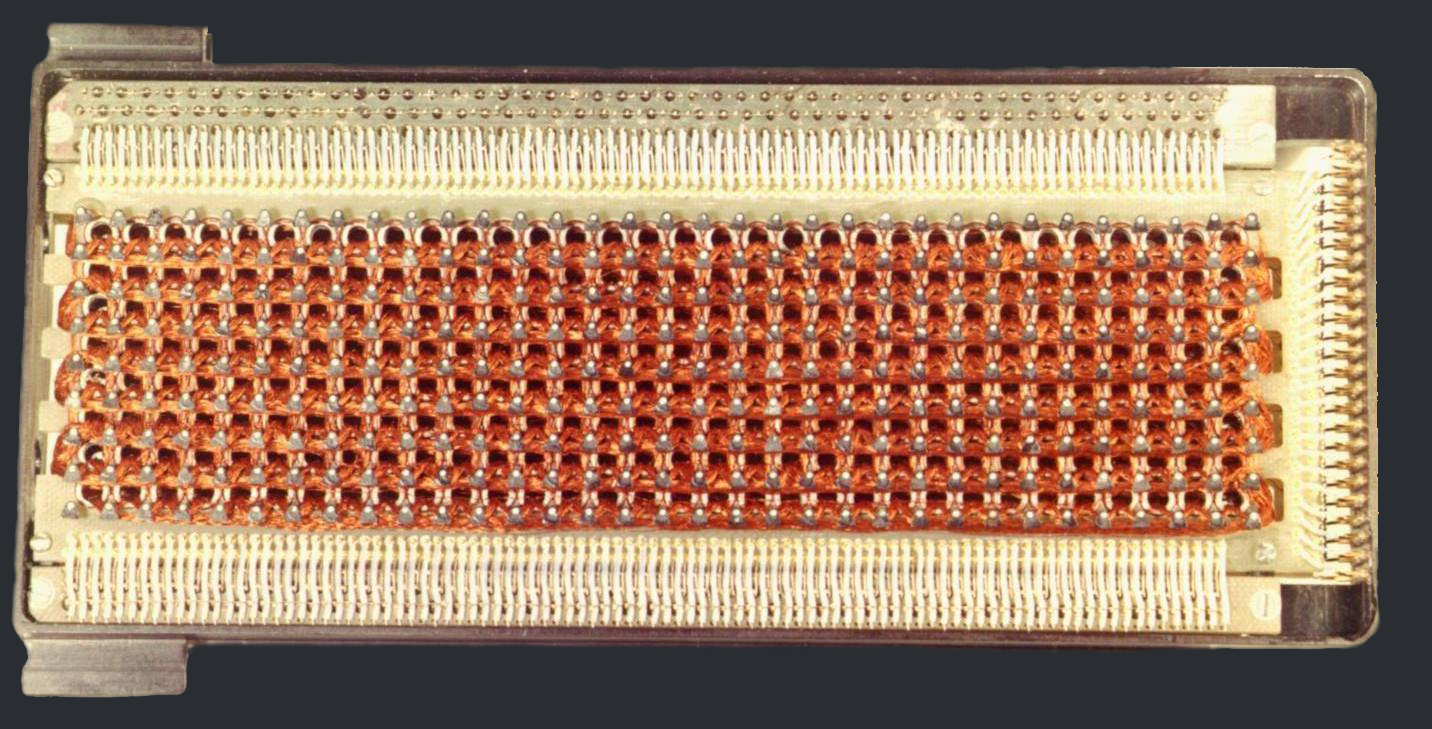

Core rope memory from the Apollo spacecraft.

By passing or not wires through a magnetic ring, knitters created 1s and 0s and hence a ROM-stored program.

This is arguably the first example of software "woven" into hardware.

Core rope memory from the Apollo spacecraft.

By passing or not wires through a magnetic ring, knitters created 1s and 0s and hence a ROM-stored program.

This is arguably the first example of software "woven" into hardware.

Interweaving the Hardware-Software Parallel Stack

The Interweaving project seeks to advance the state of the art for parallel systems.

Usually, the layers of a parallel system (compiler, runtime, operating system, and hardware)

are considered as separate entities with a rigid division of labor. This project investigates

an alternative model, Interweaving, in which these layers are integrated as needed to

improve the performance, scalability, and efficiency of the specific parallel system.

Our ROSS paper at Supercomputing 2021

presents the case for an interwoven parallel hardware/software stack.

We designed fast barriers

by blending hardware and software on an Intel HARP system that integrates x64 cores and an FPGA

fabric in the same package. We studied the prospects of

functional address translation

for parallel systems, and developed

CARAT,

a system that performs address translation as an OS/compiler co-design,

rather than a contract beteween OS and hardware, and CARAT CAKE,

a system that brings CARAT into the kernel and fully replaces OS paging via compiler/kernel cooperation.

We developed and implemented

TPAL,

a task parallel assembly language that leverages existing kernel and hardware support for

interrupts to allow parallelism to remain latent until a heartbeat (fast user-level timing interrupt),

when it can be manifested with low cost.

We discovered spatio-temporal value correlation,

an important but overlooked software behavior in which the values computed by the same line of code tend

to be of similar magnitude as the instruction repeatedly executes. We capitalized on this software

property to design ST2 GPU,

a GPU architecture that employs specialized adders for energy efficiency

(talk)

(slides).

To evaluate ST2 GPU, we developed and released as an open source framework

AccelWattch,

a highly-accurate power model for Nvidia Volta GPUs that is within 7.5% of hardware power measurements

and it is the first power modeling tool that can be driven entirely by software simulation

(e.g., Accel-Sim), or hardware performance counters, or a hybrid combination of the two

(talk)

(slides).

This work has been partially supported by

NSF CNS-1763743.

SeaFire: Application-Specific Design for Dark Silicon

While Elastic Fidelity and Elastic Memory Hierarchies cut back on the energy consumption,

they do not push the power wall far enough. To gain another order of magnitude in energy efficiency,

we must minimize the overheads of modern computing. The idea behind the

SeaFire

project is that instead of building conventional high-overhead multicores that we cannot

power, we should repurpose the dark silicon for specialized energy-efficient

cores. A running application will power up only the cores most closely matching

its computational requirements, while the rest of the chip remains off to

conserve energy. Preliminary results on SeaFire have been published at a highly-cited

IEEE Micro article

in July 2011, an invited

USENIX ;login:

article in April 2012, the

ACLD

workshop in 2010, a

keynote at ISPDC

in 2010, an invited presentation at the

NSF Workshop on Sustainable Energy-Efficient Data Management

in 2011 (the abstract is

here),

and an invited presentation at

HPTS in 2011.

This work was partially funded by an

ISEN Booster

award and later continued as part of the

Intel Parallel Computing Center at Northwestern

that I co-founded with faculty from the IEMS department.

Elastic Fidelity: Disciplined Approximate Computing

At the circuit level, the shrinking transistor geometries and race for energy-efficient

computing result in significant error rates at smaller technologies due to process variation

and low voltages (especially with near-threshold computing). Traditionally, these

errors are handled at the circuit and architectural layers, as computations

expect 100% reliability. Elastic Fidelity computing is based on the observation

that not all computations and data require 100% fidelity; we can judiciously

let errors manifest in the error-resilient data, and handle them higher in the

stack. We envision

programming language extensions

that allow data objects to be

instantiated with certain accuracy guarantees, which are recorded by the

compiler and communicated to hardware, which then steers computations and data

to separate ALU/FPU blocks and cache/memory regions that relax the guardbands

and run at lower voltage to conserve energy. Our vision was first presented at a poster in

ASPLOS 2011.

To accurately model the impact of errors we developed

b-HiVE,

a bit-level history-based error model for functional units which, for the first time,

accounts for the value correlation that is inherently found in software systems.

We then developed Lazy Pipelines,

a microarchitecture that utilizes vacant functional unit cycles to reduce computation

error rate under lower-than-nominal voltage. We showed how elastic fidelity can lead to

significant energy savings in real-world graph applications through a novel

edge importance identification

technique for graphs based on locality sensitive hashing, which allows for processing low-importance edges

with elastic fidelity operations. We further developed the concept of elastic fidelity through

Temporal Approximate Function Memoization,

a compiler transformation that replaces function executions with historical results when the function output is stable.

Our work with Elastic Fidelity also formed the stepping stone for

VaLHALLA, a variable-latency speculative

lazy adder that saves 70% of the nominal power while guaranteed correctness.

This work was partially funded by

NSF CCF-1218768 and NSF CCF-1217353.

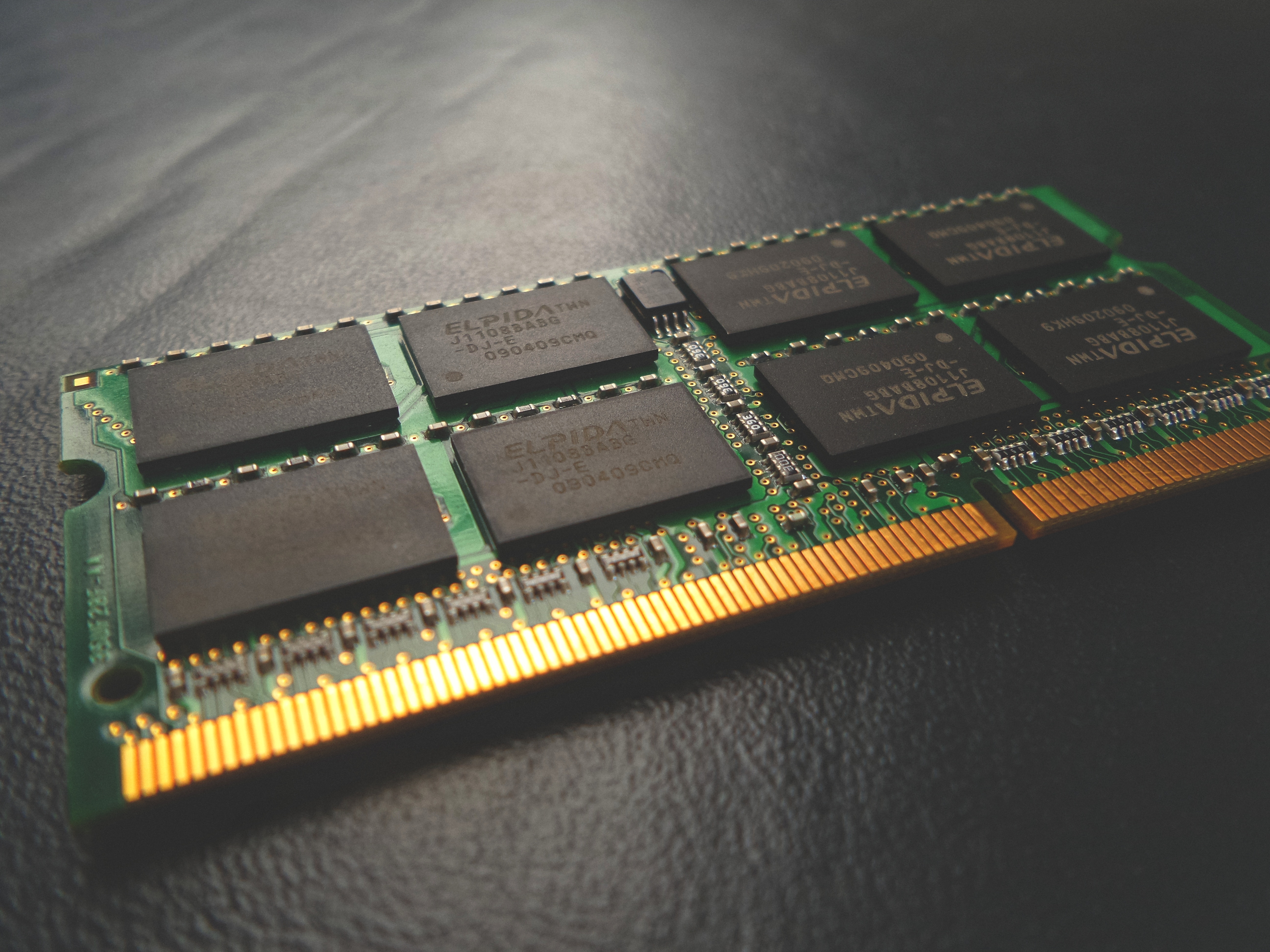

Elastic Memory Hierarchies

In this project we develop adaptive cache designs and memory hierarchy sub-systems that minimize the

overheads of storing, retrieving and communicating data to/from memories and other cores.

Reactive NUCA,

an incarnation of Elastic Memory Hierarchies for near-optimal data placement was published at

ISCA 2009 and won an

IEEE Micro Top Picks

award in 2010, while newer papers on Dynamic Directories at

DATE 2012

and

IEEE Computer Special Issue on Multicore Coherence

in 2013 present an instance of Elastic Memory Hierarchies that minimize interconnect power by co-locating directory

meta-data with sharer cores. You can also find an interview on Dynamic Directories conducted by Prof. Srini Devadas (MIT)

here.

Later, we designed SCP, an instance of Elastic Memory Hierarchies that

stores the prefetching engine's meta-data in the cache space saved by cache compression, leading to 13-22% application speedup.

Through this project we also investigated DRAM thermal management techniques, which have been largely overlooked by the community,

even though more than a third of energy is consumed on memory, and thermal events play an important role on the overall DRAM power consumption and reliability.

Together with fellow faculty Seda and Gokan Memik, we recognized the importance of the problem, and devised techniques to shape

the power and thermal profile of DRAMs using OS-level optimizations. We published some of our results on DRAM thermal management at

HPCA 2011.

This thrust currently focuses on revisiting memory hierarchy designs, optical memories, and new hardware-software

co-designs for virtual-to-physical address mapping. This work is partially funded by

NSF CCF-1218768

and

CCF-1453853.

Publications

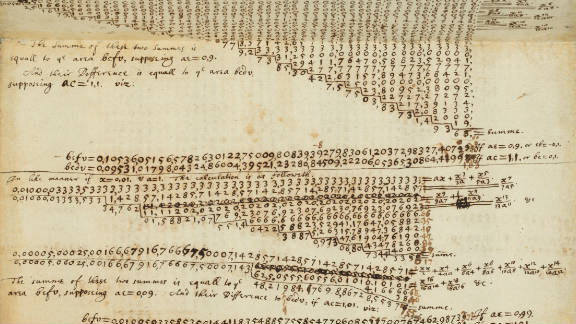

Excerpt from Isaac Newton's papers.

Excerpt from Isaac Newton's papers.

2025

QuantEM: The quantum error management compiler.

Ji Liu, Quinn Langfitt, Mingyoung Jessica Jeng, Alvin Gonzales, Noble Agyeman-Bobie, Kaiya Jones,

Siddharth Vijaymurugan, Daniel Dilley, Zain H. Saleem, Nikos Hardavellas, and Kaitlin N. Smith.

arXiv Quantum Physics (quant-ph) arXiv:2509.15505, September 2025.

Improved Prefetching Techniques for Linked Data Structures.

Nikola Vuk Maruszewski.

M.S. Thesis, Northwestern University, Technical Report NU-CS-2025-05,

Evanston, IL, June 2025.

DOI: https://doi.org/10.21985/n2-bsav-a158

Also, arXiv Hardware Architecture (cs.AR) arXiv:2505.21669, June 2025.

Modular Compilation for Quantum Chiplet Architectures.

Mingyoung Jessica Jeng, Nikola Vuk Maruszewski, Connor Selna, Michael Gavrincea, Kaitlin N. Smith, and Nikos Hardavellas.

arXiv Quantum Physics (quant-ph) arXiv:2501.08478, January 2025.

Media coverage:

2024

Dynamic Resource Allocation with Quantum Error Detection.

Quinn Langfitt, Alvin Gonzales, Joshua Gao, Ji Liu, Zain H. Saleem, Nikos Hardavellas, and Kaitlin N. Smith.

arXiv Quantum Physics (quant-ph) arXiv:2408.05565, December 2024.

Pauli Check Sandwiching for Quantum Characterization and Error Mitigation during Runtime.

Joshua Gao, Ji Liu, Alvin Gonzales, Zain Saleem, Nikos Hardavellas, and Kaitlin Smith.

NSF Workshop on Quantum Operating Systems and Real-Time Control (QuantumOS),

held in conjunction with the 30th Annual ACM Symposium on Operating Systems Principles (SOSP)

and the 57th IEEE/ACM International Symposium on Microarchitecture (MICRO),

Austin, Texas, November 2024.

Pauli Check Extrapolation for Quantum Error Mitigation.

Quinn Langfitt, Ji Liu, Benchen Huang, Alvin Gonzales, Kaitlin N. Smith, Nikos Hardavellas, and Zain H. Saleem.

In Proceedings of the IEEE International Conference on Quantum Computing and Engineering (QCE), Montréal, Québec, Canada, September 2024.

Also, arXiv Quantum Physics (quant-ph) arXiv:2406.14759, June 2024.

Pauli Check Sandwiching for Quantum Characterization and Error Mitigation during Runtime.

Joshua Gao, Ji Liu, Alvin Gonzales, Zain H. Saleem, Nikos Hardavellas, and Kaitlin N. Smith.

In Proceedings of the IEEE International Conference on Quantum Computing and Engineering (QCE), Montréal, Québec, Canada, September 2024.

Also, arXiv Quantum Physics (quant-ph) arXiv:2408.05565v3, August 2024.

On Transparent Optimizations for Communication in Highly Parallel Systems.

Michael Wilkins.

Ph.D. Thesis, Northwestern University, Technical Report NU-CS-2024-01,

Evanston, IL, March 2024.

2023

Uncovering Latent Hardware/Software Parallelism.

Vijay Kandiah.

Ph.D. Thesis, Northwestern University, Technical Report NU-CS-2023-14,

Evanston, IL, November 2023.

Generalized Collective Algorithms for the Exascale Era.

Michael Wilkins, Hanming Wang, Peizhi Liu, Bangyen Pham, Yanfei Guo, Rajeev Thakur, Nikos Hardavellas and Peter Dinda.

In the IEEE International Conference on Cluster Computing (IEEE Cluster),

Santa Fe, New Mexico, November 2023.

Evaluating Functional Memory-Managed Parallel Languages for HPC using the NAS Parallel Benchmarks.

Michael Wilkins, Garrett Weil, Luke Arnold, Nikos Hardavellas and Peter Dinda.

In the 28th International Workshop on High-Level Parallel Programming Models and Supportive Environments (HIPS),

held in conjunction with the 37th IEEE International Parallel and Distributed Processing Symposium (IPDPS),

St. Petersburg, Florida, May 2023.

Parsimony: Enabling SIMD/Vector Programming in Standard Compiler Flows.

Vijay Kandiah, Daniel Lustig, Oreste Villa, David Nellans and Nikos Hardavellas.

In Proceedings of the IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Montreal, Canada, February 2023.

WARDen: Specializing Cache Coherence for High-Level Parallel Languages.

Michael Wilkins, Sam Westrick, Vijay Kandiah, Alex Bernat, Brian Suchy, Enrico Armenio Deiana, Simone Campanoni, Umut Acar, Peter Dinda and Nikos Hardavellas.

In Proceedings of the IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Montreal, Canada, February 2023.

Program State Element Characterization.

Enrico Armenio Deiana, Brian Suchy, Michael Wilkins, Brian Homerding, Tommy McMichen, Katarzyna Dunajewski, Peter Dinda, Nikos Hardavellas and Simone Campanoni.

In Proceedings of the IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Montreal, Canada, February 2023.

2022

ACCLAiM: Advancing the Practicality of MPI Collective Communication Autotuning Using Machine Learning.

Michael Wilkins, Yanfei Guo, Rajeev Thakur, Peter Dinda and Nikos Hardavellas.

In Proceedings of the IEEE International Conference on Cluster Computing (IEEE Cluster), Heidelberg, Germany, September 2022.

Computing With an All-Optical Cache Hierarchy Using Optical Phase Change Memory as Last Level Cache.

Haiyang Han, Theoni Alexoudi, Chris Vagionas, Nikos Pleros and Nikos Hardavellas.

In Proceedings of the European Conference on Optical Communications (ECOC), Bordeaux, France, September 2022.

High-performance and Energy-efficient Computing Systems Using Photonics.

Haiyang Han.

Ph.D. Thesis, Northwestern University, Technical Report NU-CS-2022-07,

Evanston, IL, May 2022.

A Practical Shared Optical Cache with Hybrid MWSR/R-SWMR NoC for Multicore Processors.

Haiyang Han, Theoni Alexoudi, Chris Vagionas, Nikos Pleros and Nikos Hardavellas.

In ACM Journal on Emerging Technologies in Computing Systems (ACM JETC), 2022.

CARAT CAKE: Replacing Paging via Compiler/Kernel Cooperation.

Brian Suchy, Souradip Ghosh, Aaron Nelson, Zhen Huang, Drew Kersnar, Siyuan Chai, Michael Cuevas,

Alex Bernat, Gaurav Chaudhary, Nikos Hardavellas, Simone Campanoni, and Peter Dinda.

In Proceedings of the 2022 Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), Lausanne, Switzerland, March 2022.

SupermarQ: A Scalable Quantum Benchmark Suite.

Teague Tomesh, Pranav Gokhale, Victory Omole, Gokul Subramanian Ravi, Kaitlin Smith, Joshua Viszlai, Xin-Chuan Wu, Nikos Hardavellas, Margaret R. Martonosi and Fred Chong.

In Proceedings of the 28th IEEE International Symposium on High-Performance Computer Architecture (HPCA), Seoul, South Korea, February 2022.

Also available on arXiv Quantum Physics (quant-ph) and Hardware Architecture (cs.AR).

Best Paper Award.

IEEE MICRO Top Picks Honorable Mention, 2023.

- SupermarQ webpage.

- SupermarQ Fundamentals white paper.

- Media coverage:

BCG,

The Quantum Insider,

HPC wire,

AWS Blog,

Quantum Computing Report,

The Quantum Observer,

The DeepTech Insider,

NewsBreak,

CB Insights' profile of IonQ,

Ex Bulletin,

Chicago Inno,

Tech Transfer Central,

Flipboard,

ivoox,

The Center for Entrepreneurship and Innovation at the University of Chicago,

HESHMore, and

the EPiQC center.

- Full-feature podcast: The Post-Quantum World by protiviti,

also available in

audible,

Apple Podcasts,

Spreaker and

Podcast Addict.

- SupermarQ Zenodo DOI for the HPCA-28 artifact.

- SupermarQ GitHub link for the latest version of the SupermarQ sources.

2021

Public Release and Validation of SPEC CPU2017 PinPoints.

Haiyang Han and Nikos Hardavellas.

arXiv Performance (cs.PF); Hardware Architecture (cs.AR) preprint arXiv:2112.06981, December 2021.

Energy-Proportional Data Center Network Architecture Through OS, Switch and Laser Co-design.

Haiyang Han, Nikos Terzenidis, Dimitris Syrivelis, Arash F. Beldachi, George T. Kanellos, Yigit Demir, Jie Gu, Srikanth Kandula, Nikos Pleros, Fabián Bustamante and Nikos Hardavellas.

arXiv Networking and Internet Architecture (cs.NI); Hardware Architecture (cs.AR) preprint arXiv:2112.02083, December 2021.

ST2 GPU: An Energy-Efficient GPU Design with Spatio-Temporal Shared-Thread Speculative Adders.

Vijay Kandiah, Ali Murat Gok, Georgios Tziantzioulis and Nikos Hardavellas.

In Proceedings of the Design Automation Conference (DAC), San Francisco, CA, December 2021

(talk)

(slides).

A FACT-based Approach: Making ML Collective Autotuning Feasible on Exascale Systems.

Michael Wilkins, Yanfei Guo, Rajeev Thakur, Nikos Hardavellas, Peter Dinda and Min Si.

In Proceedings of the 2021 Workshop on Exascale MPI (ExaMPI),

held in conjunction with Supercomputing 2021, the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC),

St. Louis, November 2021.

The Case for an Interwoven Parallel Hardware/Software Stack.

Kyle Hale, Simone Campanoni, Nikos Hardavellas and Peter Dinda.

In Proceedings of the 10th International Workshop on Runtime and Operating Systems for Supercomputers (ROSS),

held in conjunction with Supercomputing 2021, the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC),

St. Louis, November 2021.

AccelWattch: A Power Modeling Framework for Modern GPUs.

Vijay Kandiah, Scott Peverelle, Mahmoud Khairy, Junrui Pan, Amogh Manjunath, Timothy G. Rogers, Tor M. Aamodt and Nikos Hardavellas.

In Proceedings of the 54th IEEE/ACM International Symposium on Microarchitecture (MICRO), Athens, Greece, October 2021.

(talk video)

(slides)

- AccelWattch Software and Dataset (MICRO 2021 artifact):

AccelWattch is a microbenchmark-based quadratic programming framework for the power modeling of GPUs, and an accurate power model for NVIDIA Quadro Volta GV100.

The artifact includes source code for AccelWattch and the entire suite of tuning microbenchmarks, pre-compiled binaries, input data, instruction traces, scripts, xls files,

and step-by-step instructions to reproduce the key results in the AccelWattch MICRO 2021 paper.

- AccelWattch GitHub link for the latest version of the AccelWattch sources and framework integrated into Accel-Sim, including microbenchmarks and validation benchmarks.

Pho$: A Case for Shared Optical Cache Hierarchies.

Haiyang Han, Theoni Alexoudi, Chris Vagionas, Nikos Pleros and Nikos Hardavellas.

In Proceedings of the ACM/IEEE International Symposium on Low Power Electronics and Design (ISLPED), July 2021

(slides).

Nominated for Best Paper Award.

Task Parallel Assembly Language for Uncompromising Parallelism.

Mike Rainey, Ryan R. Newton, Kyle Hale, Nikos Hardavellas, Simone Campanoni, Peter Dinda and Umut A. Acar.

In Proceedings of the 42nd ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI), June 2021.

2020

CARAT: A Case for Virtual Memory through Compiler- and Runtime-based Address Translation.

Brian Suchy, Simone Campanoni, Nikos Hardavellas and Peter Dinda.

In Proceedings of the 41st ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI), London, UK, June 2020.

A Simulator for Distributed Quantum Computing.

Gaurav Chaudhary.

M.S. Thesis, Northwestern University, Technical Report NU-CS-2020-15,

Evanston, IL, December 2020.

2019

Prospects for Functional Address Translation.

Conor Hetland, Georgios Tziantzioulis, Brian Suchy, Kyle Hale, Nikos Hardavellas and Peter Dinda.

In Proceedings of the 27th IEEE International Symposium on the Modeling, Analysis, and Simulation of Computer and Telecommunication Systems (MASCOTS),

Rennes, France, October 2019.

Paths to Fast Barrier Synchronization on the Node.

Conor Hetland, Georgios Tziantzioulis, Brian Suchy, Mike Leonard, Jin Han, John Albers, Nikos Hardavellas and Peter Dinda.

In Proceedings of the 28th International Symposium on High-Performance Parallel and Distributed Computing (HPDC), Phoenix, Arizona, June 2019.

2018

Energy-Efficient Computing through Approximate Arithmetic.

Ali Murat Gok.

Ph.D. Thesis, Northwestern University,

Evanston, IL, December 2018.

Temporal Approximate Function Memoization.

Georgios Tziantzioulis, Nikos Hardavellas and Simone Campanoni

IEEE Micro, Special Issue on Approximate Computing, Vol. 38(4), pp. 60-70, July/August 2018.

Unconventional Parallelization of Nondeterministic Applications.

Enrico A. Deiana, Vincent St-Amour, Peter Dinda, Nikos Hardavellas and Simone Campanoni.

In Proceedings of the 23rd ACM International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS),

Williamsburg, VA, March 2018.

Operator-Level Parallelism.

Nikos Hardavellas and Ippokratis Pandis.

Encyclopedia of Database Systems,

2nd edition, L. Liu and M. T. Ozsu (Eds.), ISBN 978-1-4899-7993-3, Springer, 2018.

Execution Skew.

Nikos Hardavellas and Ippokratis Pandis.

Encyclopedia of Database Systems,

2nd edition, L. Liu and M. T. Ozsu (Eds.), ISBN 978-1-4899-7993-3, Springer, 2018.

Inter-Query Parallelism.

Nikos Hardavellas and Ippokratis Pandis.

Encyclopedia of Database Systems,

2nd edition, L. Liu and M. T. Ozsu (Eds.), ISBN 978-1-4899-7993-3, Springer, 2018.

Intra-Query Parallelism.

Nikos Hardavellas and Ippokratis Pandis.

Encyclopedia of Database Systems,

2nd edition, L. Liu and M. T. Ozsu (Eds.), ISBN 978-1-4899-7993-3, Springer, 2018.

Stop-&-Go Operator.

Nikos Hardavellas and Ippokratis Pandis.

Encyclopedia of Database Systems,

2nd edition, L. Liu and M. T. Ozsu (Eds.), ISBN 978-1-4899-7993-3, Springer, 2018.

2017

Harnessing Approximation for Energy- and Power-Efficient Computing.

Georgios Tziantzioulis.

Ph.D. Thesis, Northwestern University,

Evanston, IL, September 2017.

POSTER: The Liberation Day of Nondeterministic Programs.

Enrico A. Deiana, Vincent St-Amour, Peter Dinda, Nikos Hardavellas and Simone Campanoni.

In Proceedings of the 26th International Conference on Parallel Architectures and Compilation Techniques (PACT), Portland, OR, September 2017.

VaLHALLA: Variable Latency History Aware Local-carry Lazy Adder.

Ali Murat Gok and Nikos Hardavellas.

In Proceedings of the 27th ACM Great Lakes Symposium on VLSI (GLSVLSI), Banff, Alberta, Canada, May 2017.

Harnessing Path Divergence for Laser Control in Data Center Networks.

Yigit Demir, Nikos Terzenidis, Haiyang Han, Dimitris Syrivelis, George T. Kanellos, Nikos Hardavellas, Nikos Pleros, Srikanth Kandula and Fabian Bustamante.

In Proceedings of the 2017 IEEE Photonics Society Summer Topical Meeting Series (IEEE SUM), Optical Switching Technologies for Datacom and Computercom Applications (OSDC), San Juan, Puerto Rico, July 2017.

Invited Paper.

Energy Proportional Photonic Interconnects.

Yigit Demir and Nikos Hardavellas.

In 12th International Conference on High Performance and Embedded Architectures and Compilers (HiPEAC), Stockholm, Sweden, January 2017.

Techniques for Energy Proportionality in Optical Interconnects.

Yigit Demir and Nikos Hardavellas.

Photonic Interconnects for Computing Systems, G. Nicolescu, S. Le Beux, M. Nikdast and J. Xu (Eds.), The River Publishers' Series in Optics and Photonics, River Publishers, 2017.

2016

Evaluation of K-Means Data Clustering Algorithm on Intel Xeon Phi.

S. Lee, W.-k. Liao, A. Agrawal, N. Hardavellas and A. Choudhary.

In Proceedings of the 3rd Workshop on Advances in Software and Hardware for Big Data to Knowledge Discovery (ASH), co-located with the IEEE Conference on Big Data (IEEE BigData), Washington, D.C., December 5-8, 2016.

Energy Proportional Photonic Interconnects.

Y. Demir and N. Hardavellas.

In ACM Transactions on Architecture and Code Optimization (ACM TACO), Vol. 13(5), December 2016.

SLaC: Stage Laser Control for a Flattened Butterfly Network.

Y. Demir and N. Hardavellas.

In Proceedings of the 22nd IEEE International Symposium on High Performance Computer Architecture (HPCA), Barcelona, Spain, March 2016.

Lazy Pipelines: Enhancing Quality in Approximate Computing.

G. Tziantzioulis, A. M. Gok, S M Faisal, N. Hardavellas, S. Ogrenci-Memik and S. Parthasarathy.

In Proceedings of the Design, Automation, and Test in Europe (DATE), Dresden, Germany, March 2016.

Towards Energy-Proportional Optical Interconnects.

Y. Demir and N. Hardavellas.

In Proceedings of the 2nd International Workshop on Optical/Photonic Interconnects for Computing Systems (OPTICS), Dresden, Germany, March 2016.

Invited Paper.

2015

Edge Importance Identification for Energy Efficient Graph Processing.

S. M. Faisal, G. Tziantzioulis, A. M. Gok, S. Parthasarathy, N. Hardavellas and S. Ogrenci-Memik.

In Proceedings of the 2015 IEEE International Conference on Big Data (IEEE BigData), Santa Clara, CA, October 2015.

SCP: Synergistic Cache Compression and Prefetching.

B. Patel, G. Memik and N. Hardavellas.

In Proceedings of the 33rd IEEE International Conference on Computer Design (ICCD), New York City, NY, October 2015.

Parka: Thermally Insulated Nanophotonic Interconnects.

Y. Demir and N. Hardavellas.

In Proceedings of the 9th International Symposium on Networks-on-Chip (NOCS), Vancouver, Canada, September 2015.

High Performance and Energy Efficient Computer System Design Using Photonic Interconnects.

Yigit Demir.

Ph.D. Thesis, Northwestern University,

Evanston, IL, August 2015.

b-HiVE: A Bit-Level History-Based Error Model with Value Correlation for Voltage-Scaled Integer and Floating Point Units.

G. Tziantzioulis, A. M. Gok, S. M. Faisal, N. Hardavellas, S. Memik and S. Parthasarathy.

In Proceedings of the Design Automation Conference (DAC), San Francisco, CA, June 2015.

- Software: SoftInj, a software fault injection library that implements the b-HiVE error models.

- Dataset: b-HiVE Hardware Characterization Dataset,

a raw dataset of full-analog HSIM and SPICE simulations of industrial-strength 64-bit integer ALUs, integer multipliers, bitwise logic operations,

FP adders, FP multipliers, and FP dividers from OpenSparc T1 across voltage domains, along with controlled value correlation experiments (2015).

Towards Energy-Efficient Photonic Interconnects.

Y. Demir and N. Hardavellas.

In Proceedings of SPIE, Optical Interconnects XV, San Francisco, CA, February 2015.

Also selected to appear in SPIE Green Photonics.

2014

LaC: Integrating Laser Control in a Photonic Interconnect.

Y. Demir and N. Hardavellas.

In Proceedings of the IEEE Photonics Conference (IPC), pp. 28-29, La Jolla, CA, October 2014.

EcoLaser: An Adaptive Laser Control for Energy-Efficient On-Chip Photonic Interconnects.

Y. Demir and N. Hardavellas.

In Proceedings of the International Symposium on Low Power Electronics and Design (ISLPED), pp. 3-8, La Jolla, CA, August 2014.

Galaxy: A High-Performance Energy-Efficient Multi-Chip Architecture Using Photonic Interconnects.

Y. Demir, Y. Pan, S. Song, N. Hardavellas, G. Memik and J. Kim.

In Proceedings of the ACM International Conference on Supercomputing (ICS), pp. 303-312, Munich, Germany, June 2014.

LaC: Integrating Laser Control in a Photonic Interconnect.

Y. Demir and N. Hardavellas.

Technical Report NU-EECS-14-03, Northwestern University, Evanston, IL, April 2014.

EcoLaser: An Adaptive Laser Control for Energy Efficient On-Chip Photonic Interconnects.

Y. Demir and N. Hardavellas.

Technical Report NU-EECS-14-02, Northwestern University, Evanston, IL, April 2014.

2013

The Impact of Dynamic Directories on Multicore Interconnects.

M. Schuchhardt, A. Das, N. Hardavellas, G. Memik and A. Choudhary.

IEEE Computer, Special Issue on Multicore Memory Coherence, Vol. 46(10), pp. 32-39, October 2013.

Galaxy: A High-Performance Energy-Efficient Multi-Chip Architecture Using Photonic Interconnects

Y. Demir, Y. Pan, S. Song, N. Hardavellas, J. Kim and G. Memik.

Technical Report NU-EECS-13-08, Northwestern University, Evanston, IL, July 2013.

2012

Towards a Schlieren Camera.

B. Pattabiraman, R. Morton, A. Grabenhofer, N. Hardavellas, J. Tumblin and V. Gopal.

In 8th Annual Mid-West Graphics Workshop (MIDGRAPH), Chicago, IL, December 2012.

Load Balancing for Processing Spatio-Temporal Queries in Multi-Core Settings.

A. Yaagoub, G. Trajcevski, P. Scheuermann and N. Hardavellas.

In 11th International ACM Workshop on Data Engineering for Wireless and Mobile Access (MobiDE),

co-located with ACM SIGMOD International Conference on Management of Data and

ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems (ACM SIGMOD/PODS), Scottsdale, AZ, May 2012.

The Rise and Fall of Dark Silicon

N. Hardavellas.

USENIX ;login:, Vol. 37, No. 2, pp. 7-17, April 2012.

Invited Paper.

Dynamic Directories: Reducing On-Chip Interconnect Power in Multicores.

A. Das, M. Schuchhardt, N. Hardavellas, G. Memik and A. Choudhary.

In Proceedings of Design, Automation, and Test in Europe (DATE), pp. 479-484, Dresden, Germany, March 2012.

2011

Elastic Fidelity: Trading-off Computational Accuracy for Energy Reduction.

S. Roy, T. Clemons, S. M. Faisal, K. Liu, N. Hardavellas and S. Parthasarathy.

Technical Report NWU-EECS-11-02, Northwestern University, Evanston, IL, February 2011.

Also on arXiv Hardware Architecture (cs.AR) preprint arXiv:1111.4279, November 2011.

Toward Dark Silicon in Servers.

N. Hardavellas, M. Ferdman, B. Falsafi and A. Ailamaki.

IEEE Micro, Special Issue on Big Chips, Vol. 31(4), pp. 6-15, July/August 2011.

Also, IEEE Micro Spotlight Paper at Computing Now, February 2012.

Exploiting Dark Silicon for Energy Efficiency

N. Hardavellas.

NSF Workshop on Sustainable Energy-Efficient Data Management (SEEDM),

National Science Foundation, Arlington, VA, USA, May 2011.

(slides)

Elastic Fidelity: Trading-off Computational Accuracy for Energy Reduction.

S. Roy, T. Clemons, S. M. Faisal, K. Liu, N. Hardavellas and S. Parthasarathy.

In 16th International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), Newport Beach, California, March 2011 (poster).

Hardware/Software Techniques for DRAM Thermal Management.

S. Liu, B. Leung, A. Neckar, S. Ogrenci-Memik, G. Memik and N. Hardavellas.

In Proceedings of the 17th IEEE International Symposium on High Performance Computer Architecture (HPCA), pp. 479-484, San Antonio, Texas, February 2011.

2010

PAD: Power-Aware Directory Placement in Distributed Caches.

A. Das, M. Schuchhardt, N. Hardavellas, G. Memik and A. Choudhary.

Technical Report NWU-EECS-10-11, Northwestern University, Evanston, IL, December 2010.

Exploring Benefits and Designs of Optically-Connected Disintegrated Processor Architecture.

Y. Pan, Y. Demir, N. Hardavellas, J. Kim and G. Memik.

In Workshop on the Interaction between Nanophotonic Devices and Systems (WINDS),

co-located with the 43rd International Symposium on Microarchitecture (MICRO), Atlanta, GA, December 2010.

(slides)

Data-Oriented Transaction Execution.

I. Pandis, R. Johnson, N. Hardavellas and A. Ailamaki.

Proceedings of the VLDB Endowment (PVLDB), Vol. 3(1), pp. 928-939, August 2010.

Data-Oriented Transaction Execution.

I. Pandis, R. Johnson, N. Hardavellas and A. Ailamaki.

9th Hellenic Data Management Symposium (HDMS), Ayia Napa, Cyprus, July 2010.

The Path Forward: Specialized Computing in the Datacenter.

N. Hardavellas, M. Ferdman, A. Ailamaki and B. Falsafi.

In 2nd Workshop on Architectural Considerations for Large Datacenters (ACLD),

co-located with the 37th ACM/IEEE Annual International Symposium on Computer Architecture (ISCA), Saint-Malo, France, June 2010.

Power Scaling: the Ultimate Obstacle to 1K-Core Chips.

N. Hardavellas, M. Ferdman, A. Ailamaki and B. Falsafi.

Technical Report NWU-EECS-10-05, Northwestern University, Evanston, IL, March 2010.

Near-Optimal Cache Block Placement with Reactive Nonuniform Cache Architectures.

N. Hardavellas, M. Ferdman, B. Falsafi and A. Ailamaki.

IEEE Micro, Vol. 30(1), pp. 20-28, January/February 2010.

IEEE Micro Top Picks from Computer Architecture Conferences.

Data-Oriented Transaction Execution.

I. Pandis, R. Johnson, N. Hardavellas and A. Ailamaki.

Technical Report CMU-CS-10-101, Computer Science Department, Carnegie Mellon University, Pittsburgh, PA, January 2010.

2009

Reactive NUCA: Near-Optimal Block Placement and Replication in Distributed Caches.

N. Hardavellas, M. Ferdman, B. Falsafi and A. Ailamaki.

In Proceedings of the 36th ACM/IEEE Annual International Symposium on Computer Architecture (ISCA), pp. 184-195, Austin, TX, June 2009.

IEEE Micro Top Picks.

Shore-MT: A Scalable Storage Manager for the Multicore Era.

R. Johnson, I. Pandis, N. Hardavellas, A. Ailamaki and B. Falsafi.

In Proceedings of the 12th International Conference on Extending Database Technology (EDBT), pp. 24-35, Saint-Petersburg, Russia, March 2009.

Test-of-Time Award, 2019.

Software: Shore-MT, a scalable storage manager for the multicore era.

Operator-Level Parallelism.

N. Hardavellas and I. Pandis.

Encyclopedia of Database Systems,

pp. 1981-1985, L. Liu and M. T. (Eds.), ISBN 978-0-387-35544-3, Springer, 2009.

Execution Skew.

N. Hardavellas and I. Pandis.

Encyclopedia of Database Systems,

pp. 1079, L. Liu and M. T. (Eds.), ISBN 978-0-387-35544-3, Springer, 2009.

Inter-Query Parallelism.

N. Hardavellas and I. Pandis.

Encyclopedia of Database Systems,

pp. 1566-1567, L. Liu and M. T. (Eds.), ISBN 978-0-387-35544-3, Springer, 2009.

Intra-Query Parallelism.

N. Hardavellas and I. Pandis.

Encyclopedia of Database Systems,

pp. 1567-1568, L. Liu and M. T. (Eds.), ISBN 978-0-387-35544-3, Springer, 2009.

Stop-and-Go Operator.

N. Hardavellas and I. Pandis.

Encyclopedia of Database Systems,

pp. 2794, L. Liu and M. T. (Eds.), ISBN 978-0-387-35544-3, Springer, 2009.

2008

R-NUCA: Data Placement in Distributed Shared Caches.

N. Hardavellas, M. Ferdman, B. Falsafi and A. Ailamaki.

Technical Report CALCM-TR-2008-001, Computer Architecture Lab, Carnegie Mellon University, Pittsburgh, PA, December 2008.

Shore-MT: A Quest for Scalability in the Many-Core Era.

R. Johnson, I. Pandis, N. Hardavellas and A. Ailamaki.

Technical Report CMU-CS-08-114, Computer Science Department, Carnegie Mellon University, Pittsburgh, PA, 2008.

To Share Or Not To Share?.

R. Johnson, N. Hardavellas, I. Pandis, N. Mancheril, S. Harizopoulos, K. Sabirli, A. Ailamaki and B. Falsafi.

7th Hellenic Data Management Symposium (HDMS), Heraklion, Crete, Greece, July 2008.

2007

Multi-bit Error Tolerant Caches Using Two-Dimensional Error Coding.

J. Kim, N. Hardavellas, K. Mai, B. Falsafi and J. C. Hoe.

In Proceedings of the 40th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), pp. 197-209, Chicago, IL, December 2007.

To Share Or Not To Share?.

R. Johnson, N. Hardavellas, I. Pandis, N. Mancheril, S. Harizopoulos, K. Sabirli, A. Ailamaki and B. Falsafi.

In Proceedings of the 33rd International Conference on Very Large Data Bases (VLDB), pp. 351-362, Vienna, Austria, September 2007.

An Analysis of Database System Performance on Chip Multiprocessors.

N. Hardavellas, I. Pandis, R. Johnson, N. Mancheril, S. Harizopoulos, A. Ailamaki and B. Falsafi.

6th Hellenic Data Management Symposium (HDMS), Athens, Greece, July 2007.

Scheduling Threads for Constructive Cache Sharing on CMPs.

S. Chen, P. B. Gibbons, M. Kozuch, V. Liaskovitis, A. Ailamaki, G. E. Blelloch, B. Falsafi, L. Fix, N. Hardavellas, T. C. Mowry and C. Wilkerson.

In Proceedings of the 19th Annual ACM Symposium on Parallelism in Algorithms and Architectures (SPAA), pp. 105-115, San Diego, CA, June 2007.

Database Servers on Chip Multiprocessors: Limitations and Opportunities.

N. Hardavellas, I. Pandis, R. Johnson, N. Mancheril, A. Ailamaki and B. Falsafi.

In Proceedings of the 3rd Biennial Conference on Innovative Data Systems Research (CIDR), pp. 79-87, Asilomar, CA, January 2007.

2006

An Analysis of Database System Performance on Chip Multiprocessors.

N. Hardavellas, I. Pandis, R. Johnson, N. Mancheril, S. Harizopoulos, A. Ailamaki and B. Falsafi.

Technical Report CMU-CS-06-153, Computer Science Department, Carnegie Mellon University, Pittsburgh, PA, 2006.

Parallel Depth First vs. Work Stealing Schedulers on CMP Architectures.

V. Liaskovitis, S. Chen, P. B. Gibbons, A. Ailamaki, G. E. Blelloch, B. Falsafi, L. Fix, N. Hardavellas, M. Kozuch, T. C. Mowry and C. Wilkerson.

In Proceedings of the 18th Annual ACM International Symposium on Parallelism in Algorithms and Architectures (SPAA), pp. 330, Cambridge, MA, August 2006.

Simultaneous Pipelining in QPipe: Exploiting Work Sharing Opportunities Across Queries.

D. Dash, K. Gao, N. Hardavellas, S. Harizopoulos, R. Johnson, N. Mancheril, I. Pandis, V. Shkapenyuk and A. Ailamaki.

Demonstration, In Proceedings of the 22nd International Conference on Data Engineering (ICDE), Atlanta, GA, April 2006.

Best Demonstration Award.

2005

Store-Ordered Streaming of Shared Memory.

T. F. Wenisch, S. Somogyi, N. Hardavellas, J. Kim, C. Gniady, A. Ailamaki and B. Falsafi.

In Proceedings of the 14th International Conference on Parallel Architectures and Compilation Techniques (PACT), pp. 75-86, Saint Louis, MO, September 2005.

Temporal Streaming of Shared Memory.

T. F. Wenisch, S. Somogyi, N. Hardavellas, J. Kim, A. Ailamaki and B. Falsafi.

In Proceedings of the 32nd ACM/IEEE Annual International Symposium on Computer Architecture (ISCA), pp. 222-233, Madison, WI, June 2005.

2004

SORDS: Just-In-Time Streaming of Temporally-Correlated Shared Data.

T. Wenisch, S. Somogyi, N. Hardavellas, J. Kim, C. Gniady, A. Ailamaki and B. Falsafi.

Technical Report CALCM-TR-2004-002, Computer Architecture Lab, Carnegie Mellon University, Pittsburgh, PA, November 2004.

Memory Coherence Activity Prediction in Commercial Workloads.

S. Somogyi, T. F. Wenisch, N. Hardavellas, J. Kim, A. Ailamaki and B. Falsafi.

3rd Workshop on Memory Performance Issues (WMPI), pp. 37-45, Munich, Germany, June 2004.

SimFlex: a Fast, Accurate, Flexible Full-System Simulation Framework for Performance Evaluation of Server Architecture.

N. Hardavellas, S. Somogyi, T. F. Wenisch, R. E. Wunderlich, S. Chen, J. Kim, B. Falsafi, J. C. Hoe and A. Nowatzyk.

ACM SIGMETRICS Performance Evaluation Review (PER) Special Issue on Tools for Computer Architecture Research, Vol. 31(4), pp. 31-35, March 2004.

Software: Flexus,

a scalable, full-system, cycle-accurate simulation framework of multicore and multiprocessor systems.

2003 and prior

Adaptive Dirty-Block Purging.

S. C. Steely Jr. and N. Hardavellas.

U.S. patent 6,493,801, December 2002.

Apparatus and Method for Maintaining Data Coherence Within a Cluster of Symmetric Multiprocessors

L. I. Kontothanassis, M. L. Scott, N. Hardavellas, G. C. Hunt, R. J. Stets and S. Dwarkadas.

U.S. patent 6,341,339, January 2002.

The Implementation of Cashmere

R. J. Stets, D. Chen, S. Dwarkadas, N. Hardavellas, G. C. Hunt, L. Kontothanassis, G. Magklis, S. Parthasarathy, U. Rencuzogullari and M. L. Scott.

Technical Report TR 723, Computer Science Department, University of Rochester, Rochester, NY, December 1999.

Cashmere-VLM: Remote Memory Paging for Software Distributed Shared Memory.

S. Dwarkadas, N. Hardavellas, L. Kontothanassis, R. Nikhil and R. Stets.

In Proceedings of the 13th IEEE/ACM International Parallel Processing Symposium (IPPS), pp. 153-159, San Juan, Puerto Rico, April 1999.

Software Cache Coherence with Memory Scaling.

N. Hardavellas, L. Kontothanassis, R. Nikhil and R. J. Stets.

7th Workshop on Scalable Shared Memory Multiprocessors (SSMM), Barcelona, Spain, June 1998.

Understanding the Performance of DSM Applications.

W. Meira Jr., T. J. LeBlanc, N. Hardavellas and C. Amorim.

Communication and Architectural Support for Network-Based Parallel Computing (CANPC),

D. Panda and C. Stunkel Eds., Lecture Notes in Computer Science, Vol. 1199/1997, pp. 198-211, Springer Berlin/Heidelberg, February 1997, DOI: 10.1007/3-540-62573-9_15.

Cashmere-2L: Software Coherent Shared Memory on a Clustered Remote-Write Network.

R. J. Stets, S. Dwarkadas, N. Hardavellas, G. C. Hunt, L. Kontothanassis, S. Parthasarathy and M. L. Scott.

In Proceedings of the 16th ACM Symposium on Operating Systems Principles (SOSP), pp. 170-183, Saint Malo, France, October 1997.

VM-Based Shared Memory on Low-Latency, Remote-Memory-Access Networks.

L. Kontothanassis, G. C. Hunt, R. J. Stets, N. Hardavellas, M. Cierniak, S. Parthasarathy, W. Meira Jr., S. Dwarkadas and M. L. Scott.

In Proceedings of the 24th ACM/IEEE Annual International Symposium on Computer Architecture (ISCA), pp. 157-169, Denver, CO, June 1997.

Efficient Use of Memory Mapped Interfaces for Shared Memory Computing.

N. Hardavellas, G. C. Hunt, S. Ioannidis, R. J. Stets, S. Dwarkadas, L. Kontothanassis and M. L. Scott.

In IEEE CS Technical Committee on Computer Architecture (TCCA) Special Issue on Distributed Shared Memory, pp. 28-33, March 1997.

VM-Based Shared Memory on Low-Latency, Remote-Memory-Access Networks.

L. Kontothanassis, G. C. Hunt, R. J. Stets, N. Hardavellas, M. Cierniak, S. Parthasarathy, W. Meira Jr, S. Dwarkadas and M. L. Scott.

Technical Report TR 643, Computer Science Department, University of Rochester, Rochester, NY, November 1996.

The Implementation of Cashmere.

M. L. Scott, W. Li, L. Kontothanassis, G. C. Hunt, M. Michael, R. J. Stets, N. Hardavellas, W. Meira Jr., A. Poulos, M. Cierniak, S. Parthasarathy and M. Zaki.

6th Workshop on Scalable Shared Memory Multiprocessors (SSMM), Boston, MA, October 1996.

Contention in Counting Networks.

C. Busch, N. Hardavellas and M. Mavronicolas.

In Proceedings of the 13th ACM Annual Symposium on Principles of Distributed Computing (PODC), Los Angeles, CA, August 1994.

Notes on Sorting and Counting Networks.

N. Hardavellas, D. Karakos and M. Mavronicolas.

Distributed Algorithms (WDAG), A. Schiper Ed., Lecture Notes in Computer Science, Vol. 725/1993, pp. 234-248, Springer Berlin/Heidelberg, September 1993, DOI: 10.1007/3-540-57271-6_39.

Notes on Sorting and Counting Networks.

N. Hardavellas, D. Karakos and M. Mavronicolas.

Technical Report FORTH-ICS/TR-092, Institute of Computer Science, Foundation for Research and Technology - Hellas, Heraklion, Crete, Greece, July 1993.

Artifacts

The Antikythera mechanism, an ancient Greek astronomical calculator circa 87 BC, and arguably the first mechanical computer.

The Antikythera mechanism, an ancient Greek astronomical calculator circa 87 BC, and arguably the first mechanical computer.

Pinballs

SPEC2017 Pinballs: publicly distributed pinballs of SPEC CPU2017 SPECspeed benchmarks. The pinballs are collected for the Sniper architectural simulator and they are validated on CPI. December 2021.

If you use any component of this work or a derivative, please cite as follows:

Public Release and Validation of SPEC CPU2017 PinPoints.

Haiyang Han and Nikos Hardavellas.

arXiv preprint arXiv:2112.06981, December 2021.

Software

QuantEM:

a modular and extensible compiler designed to automate the integration of QED codes into

arbitrary quantum programs. The initial version of the compiler supports Pauli Check Sandwitching (PCS) techniques and Iceberg codes.

Please cite as follows:

QuantEM: The quantum error management compiler.

Ji Liu, Quinn Langfitt, Mingyoung Jessica Jeng, Alvin Gonzales, Noble Agyeman-Bobie, Kaiya Jones,

Siddharth Vijaymurugan, Daniel Dilley, Zain H. Saleem, Nikos Hardavellas, and Kaitlin N. Smith.

arXiv Quantum Physics (quant-ph) arXiv:2509.15505, September 2025.

Parsimony: an LLVM prototype implementation of the Parsimony

SPMD programming model and auto-vectorizing compiler (CGO 2023). Parsimony is a SPMD programming approach built with semantics

designed to be compatible with multiple languages and to cleanly integrate into the standard optimizing compiler toolchains.

The software artifact is a standalone compiler IR-to-IR pass within LLVM that can perform auto-vectorization independently of

other passes, improving the language and toolchain compatibility of SPMD programming.

The latest version of Parsimony is also provided as a public GitHub repository.

Please cite as follows:

Parsimony: Enabling SIMD/Vector Programming in Standard Compiler Flows.

Vijay Kandiah, Daniel Lustig, Oreste Villa, David Nellans and Nikos Hardavellas.

In Proceedings of the IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Montreal, Canada, February 2023.

CARMOT: an LLVM implementation of the Compiler and Runtime

Memory Observation Tool for Program State Element Characterization (CGO 2023). CARMOT is a compiler and parallel runtime co-design

that provides programmers with recommendations on how to properly apply modern programming language abstractions that are often hard

to reason about, such as transfer sets in OpenMP directives and C++ smart pointers.

Please cite as follows:

Program State Element Characterization.

Enrico Armenio Deiana, Brian Suchy, Michael Wilkins, Brian Homerding, Tommy McMichen, Katarzyna Dunajewski, Peter Dinda, Nikos Hardavellas and Simone Campanoni.

In Proceedings of the IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Montreal, Canada, February 2023.

WARDen: a software implementation of a cache coherence protocol that

capitalizes on the WARD property of Parallel ML to drive cache coherence (CGO 2023). The protocol is implemented within the Sniper

computer architecture simulator co-designed with the MPL runtime, and is provided within a VM image to contain all software dependencies.

Please cite as follows:

WARDen: Specializing Cache Coherence for High-Level Parallel Languages.

Michael Wilkins, Sam Westrick, Vijay Kandiah, Alex Bernat, Brian Suchy, Enrico Armenio Deiana, Simone Campanoni, Umut Acar, Peter Dinda and Nikos Hardavellas.

In Proceedings of the IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Montreal, Canada, February 2023.

SupermarQ: a scalable, hardware-agnostic quantum benchmark

suite which uses application-level metrics to measure the performance of modern NISQ quantum computers.

Best Paper Award.

IEEE MICRO Top Picks Honorable Mention, 2023.

Please cite as follows:

SupermarQ: A Scalable Quantum Benchmark Suite.

Teague Tomesh, Pranav Gokhale, Victory Omole, Gokul Subramanian Ravi, Kaitlin Smith, Joshua Viszlai, Xin-Chuan Wu,

Nikos Hardavellas, Margaret R. Martonosi and Fred Chong.

In Proceedings of the 28th IEEE International Symposium on High-Performance Computer Architecture (HPCA),

Seoul, South Korea, February 2022.

CARAT CAKE: an aerokernel (Nautilus) running with a CARAT

address space abstraction (PLDI 2020) implemented in LLVM. CARAT CAKE replaces paging in the kernel with a system that can

operate using only physical addresses, with memory management delegated explicitly to the compiler with support by the runtime.

Please cite as follows:

CARAT CAKE: Replacing Paging via Compiler/Kernel Cooperation.

Brian Suchy, Souradip Ghosh, Aaron Nelson, Zhen Huang, Drew Kersnar, Siyuan Chai, Michael Cuevas, Alex Bernat,

Gaurav Chaudhary, Nikos Hardavellas, Simone Campanoni, and Peter Dinda.

In Proceedings of the 2022 Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS),

Lausanne, Switzerland, March 2022.

AccelWattch: a microbenchmark-based quadratic programming framework for the power modeling of GPUs, and an accurate power model for NVIDIA Quadro Volta GV100.

Please cite as follows:

AccelWattch: A Power Modeling Framework for Modern GPUs.

Vijay Kandiah, Scott Peverelle, Mahmoud Khairy, Junrui Pan, Amogh Manjunath, Timothy G. Rogers, Tor M. Aamodt and Nikos Hardavellas.

In Proceedings of the 54th IEEE/ACM International Symposium on Microarchitecture (MICRO), Athens, Greece, October 2021.

- AccelWattch Zenodo DOI for the AccelWattch MICRO-2021 artifact.

- AccelWattch GitHub link for the latest version of the AccelWattch sources and framework integrated into Accel-Sim, including microbenchmarks and validation benchmarks.

TPAL:

a task-parallel assembly language for heartbeat scheduling that

dramatically reduces the overheads of parallelism without compromising scalability.

Please cite as follows:

Task Parallel Assembly Language for Uncompromising Parallelism.

M. Rainey, P. Dinda, K. Hale, R. Newton, U. A. Acar, N. Hardavellas, S. Campanoni.

In Proceedings of the 42nd ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI), June 2021.

SoftInj:

a software fault injection library that implements the b-HiVE error models.

Please cite as follows:

b-HiVE: A Bit-Level History-Based Error Model with Value Correlation for Voltage-Scaled Integer and Floating Point Units.

G. Tziantzioulis, A. M. Gok, S. M. Faisal, N. Hardavellas, S. Memik and S. Parthasarathy.

In Proceedings of the Design Automation Conference (DAC), San Francisco, CA, June 2015.

Shore-MT, a scalable storage manager for the multicore era.

Test-of-Time Award, EDBT 2019.

Please cite as follows:

Shore-MT: A Scalable Storage Manager for the Multicore Era.

R. Johnson, I. Pandis, N. Hardavellas, A. Ailamaki and B. Falsafi.

In Proceedings of the 12th International Conference on Extending Database Technology (EDBT), pp. 24-35, Saint-Petersburg, Russia, March 2009.

Flexus,

a scalable, full-system, cycle-accurate simulation framework of multicore and multiprocessor systems.

Please cite as follows:

SimFlex: a Fast, Accurate, Flexible Full-System Simulation Framework for Performance Evaluation of Server Architecture.

N. Hardavellas, S. Somogyi, T. F. Wenisch, R. E. Wunderlich, S. Chen, J. Kim, B. Falsafi, J. C. Hoe and A. Nowatzyk.

ACM SIGMETRICS Performance Evaluation Review (PER) Special Issue on Tools for Computer Architecture Research, Vol. 31(4), pp. 31-35, March 2004.

Datasets

AccelWattch Dataset: a complete dataset, pre-compiled binaries, instruction traces, scripts, xls files, and step-by-step instructions to reproduce the key results in the AccelWattch MICRO 2021 paper.

AccelWattch is a microbenchmark-based quadratic programming framework for the power modeling of GPUs, and an accurate power model for NVIDIA Quadro Volta GV100.

Please cite as follows:

AccelWattch: A Power Modeling Framework for Modern GPUs.

Vijay Kandiah, Scott Peverelle, Mahmoud Khairy, Junrui Pan, Amogh Manjunath, Timothy G. Rogers, Tor M. Aamodt and Nikos Hardavellas.

In Proceedings of the 54th IEEE/ACM International Symposium on Microarchitecture (MICRO), Athens, Greece, October 2021.

b-HiVE Hardware Characterization Dataset:

a raw dataset of full-analog HSIM and SPICE simulations of industrial-strength 64-bit integer ALUs, integer multipliers, bitwise logic operations,

FP adders, FP multipliers, and FP dividers from OpenSparc T1 across voltage domains, along with controlled value correlation experiments.

Please cite as follows:

b-HiVE: A Bit-Level History-Based Error Model with Value Correlation for Voltage-Scaled Integer and Floating Point Units.

G. Tziantzioulis, A. M. Gok, S. M. Faisal, N. Hardavellas, S. Memik and S. Parthasarathy.

In Proceedings of the Design Automation Conference (DAC), San Francisco, CA, June 2015.

Presentations

Honors & Awards

Computer Science Social Initiative (CSSI) Ambassador Awards, Northwestern University, 2025.

Jessica Jeng.

Future CRA Leader, Computing Research Association, 2024.

Nikos Hardavellas.

Chookaszian Fellowship, Northwestern University, 2023-2024.

Jessica Jeng.

Northwestern Academic Year Undergraduate Research Award, 2023. A Compiler for Quantum Chiplets.

Nikola Maruszewski.

McCormick Summer Undergraduate Research Award, 2023. A Compiler for Quantum Chiplets.

Nikola Maruszewski.

IEEE Micro Top Picks Honorable Mention, 2023.

SupermarQ: A Scalable Quantum Benchmark Suite.

T. Tomesh, P. Gokhale, V. Omole, G. S. Ravi, K. N. Smith, J. Viszlai, X.-C. Wu, N. Hardavellas, M. Martonosi and F. T. Chong.

The Top Picks awards recognize

"the year's most significant research papers in computer architecture based on novelty and long-term impact"

across all computer architecture conferences.

Associated Student Government Faculty & Administrator Honor Roll, 2021-2022.

Nikos Hardavellas.

Nominated by the undergraduate student body for contributions to COMP_SCI 213: Introduction to Computer Systems.

Faculty Service Award, Northwestern University, Department of Computer Science, 2022.

Nikos Hardavellas.

Best Paper Award, The 28th IEEE International Symposium on High-Performance Computer Architecture (HPCA-28), 2022,

SupermarQ: A Scalable Quantum Benchmark Suite.

Teague Tomesh, Pranav Gokhale, Victory Omole, Gokul Subramanian Ravi, Kaitlin N. Smith, Joshua Viszlai, Xin-Chuan Wu, Nikos Hardavellas, Margaret Martonosi and Frederic T. Chong.

Best Paper Award Nomination, ACM/IEEE International Symposium on Low Power Electronics and Design (ISLPED), 2021,

Pho$: A Case for Shared Optical Cache Hierarchies.

Haiyang Han, Theoni Alexoudi, Chris Vagionas, Nikos Pleros and Nikos Hardavellas.

Terminal Year Fellowship, Northwestern University, 2021.

Haiyang (Drake) Han.

Test-of-Time Award, International Conference on Extending Database Technology (EDBT), 2019,

Shore-MT: A Scalable Storage Manager for the Multicore Era.

R. Johnson, I. Pandis, N. Hardavellas, A. Ailamaki and B. Falsafi.

Article originally appeared in EDBT 2009.

Royal E. Cabell Fellowship, Northwestern University, 2019.

Michael Wilkins.

Terminal Year Fellowship, Northwestern University, 2017.

George Tziantzioulis.

Best Ph.D. Dissertation Award in Computer Engineering, Northwestern University, 2016.

High-Performance and Energy-Efficient Computer System Design Using Photonic Interconnects.

Yigit Demir.

NSF CAREER Award, The National Science Foundation (NSF), CISE:CCF:SHF, 2015.

Energy-Efficient and Energy-Proportional Silicon-Photonic Manycore Architectures.

Nikos Hardavellas.

Royal E. Cabell Fellowship, Northwestern University, 2015.

Haiyang (Drake) Han.

Best Computer Engineering Poster Award, EECS Fair, Northwestern University, 2015.

b-HiVE: A Bit-Level History-Based Error Model with Value Correlation for Voltage-Scaled Integer and Floating Point Units.

Georgios Tziantzioulis, Ali Murat Gok and Nikos Hardavellas.

Second Computer Engineering Poster Award, EECS Fair, Northwestern University, 2015.

Towards Energy-Efficient Photonic Interconnects.

Yigit Demir and Nikos Hardavellas.

Second Computer Engineering Poster Award, EECS Fair, Northwestern University, 2014.

EcoLaser: Adaptive Laser Control for Energy Efficient On-Chip Photonic Interconnects.

Yigit Demir and Nikos Hardavellas.

Third EECS Poster Award, EECS Fair, Northwestern University, 2013.

Galaxy: Pushing the Power and Bandwidth Walls with Optically-Connected Disintegrated Processors.

Yigit Demir and Nikos Hardavellas.

Fellow, Searle Center for Teaching Excellence, 2012, Northwestern University.

Nikos Hardavellas.

IEEE Micro Spotlight Paper, February 2012. Toward Dark Silicon in Servers.

N. Hardavellas, M. Ferdman, B. Falsafi and A. Ailamaki.

Article originally appeared in IEEE Micro Special Issue on Big Chips, July/August 2011.

Morrison Fellowship, Northwestern University, 2011.

Yigit Demir.

Undergraduate Research Award, Northwestern University, 2011.

Sourya Roy.

Keynote Talk, 9th International Symposium on Parallel and Distributed Computing (ISPDC), 2010.

When Core Multiplicity Doesn't Add Up.

Nikos Hardavellas.

IEEE Micro Top Picks, 2010.

Near-Optimal Cache Block Placement with Reactive Nonuniform Cache Architectures.

N. Hardavellas, M. Ferdman, B. Falsafi and A. Ailamaki.

The Top Picks awards recognize

"the year's most significant research papers in computer architecture based on novelty and long-term impact"

across all computer architecture conferences.

Undergraduate Research Award, Northwestern University, 2010.

Eric Anger.

June and Donald Brewer Chair, 2009-2011. Northwestern University.

Nikos Hardavellas.

Best Demonstration Award, 22nd IEEE International Conference on Data Engineering (ICDE), 2006.

Simultaneous Pipelining in QPipe: Exploiting Work Sharing Opportunities Across Queries.

D. Dash, K. Gao, N. Hardavellas, S. Harizopoulos, R. Johnson, N. Mancheril, I. Pandis, V. Shkapenyuk and A. Ailamaki.

Nation Merit Scholarship, Northwestern University, 2006.

Mathew Lowes.

Technical Award for Contributions to the Alpha Microprocessor, 2000.

Compaq Computer Corporation, Marlborough, MA.

Nikos Hardavellas.

FORTH Fellowship, 1993-1995.

Foundation for Research and Technology - Hellas (FORTH), Greece.

Nikos Hardavellas.

Media Coverage

The Quantum Insider.

Researchers Say Quantum Compiler Boosts Speed And Reliability For Chiplet-Based Modular Systems.

January 22, 2025

Semiconductor Engineering.

Parallelized Compilation Pipeline Optimized for Chiplet-Based Quantum Computers.

January 21, 2025

Flipboard. Applying classical benchmarking methodologies to create a principled quantum benchmark suite | Amazon Web Services. March 16, 2022

AWS Quantum Computing Blog. Applying classical benchmarking methodologies to create a principled quantum benchmark suite. March 15, 2022

Protivi, The Post-Quantum World PODCAST. Benchmarking Quantum Computers with Super.tech. March 9, 2022 (also available in audible, Apple Podcasts, ivoox, Spreaker, Podcast Addict)

EPiQC. Super.tech/EPiQC Research Informs New Suite of Benchmarks for Quantum Computers. February 28, 2022

The DeepTech Insider. Chicago-based Super.tech Releases SupermarQ – A New Suite of Benchmarks for Quantum Computers. February 27, 2022

ExBulletin. Super.tech Secures $1.65 Million Grant and Announces New Benchmark Auite for Quantum Computers. February 25, 2022

HPC wire. Super.tech Releases SupermarQ – A New Suite of Benchmarks for Quantum Computers. February 24, 2022

CB Insights, IonQ. Two New Quantum Benchmarking Suites Announced by IonQ and Super.Tech. February 24, 2022

The Quantum Observer. Chicago-based Super.tech Releases SupermarQ – A New Suite of Benchmarks for Quantum Computers. February 24, 2022

The Polsky Center for Entrepreneurship and Innovation, University of Chicago. Super.tech Secures $1.65M Grant and Launches New Benchmarking Suite for Quantum Computers. February 24, 2022

HESHMore. Chicago-based Super.tech Releases SupermarQ – A New Suite of Benchmarks for Quantum Computers. February 24, 2022

The Quantum Insider. Chicago-based Super.tech Releases SupermarQ – A New Suite of Benchmarks for Quantum Computers. February 24, 2022

Quantum Computing Report. Two New Quantum Benchmarking Suites Announced by IonQ and Super.Tech. February 23, 2022

BSG. The Race to Quantum Advantage Depends on Benchmarking. February 23, 2022

Newsbreak. SupermarQ: A Scalable Quantum Benchmark Suite. February 22, 2022

Intel Developer Zone. Intel Parallel Computing Center at Northwestern University. December 19, 2014

MIT News. Smarter Caching. February 19, 2014

Computing Now. Software Engineering – The Impact of Dynamic Directories on Multicore Interconnects. October 9, 2013

IEEE Software. Srini Devadas speaks with author Nikos Hardavellas on The Impact of Dynamic Directories on Multicore Interconnects. October 4, 2013

Funding

NSF SPX-2119069.

Collaborative Research: PPoSS: LARGE: Unifying Software and Hardware to Achieve Performant and Scalable Frictionless Parallelism in the Heterogeneous Future.

Peter A. Dinda, Nikos Hardavellas, Simone Campanoni, Umut Acar (CMU), Guy Blelloch (CMU), 2020–2022

NSF SPX-2028851.

Collaborative Research: PPoSS: Planning: Unifying Software and Hardware to Achieve Performant and Scalable Zero-cost Parallelism in the Heterogeneous Future.

Peter A. Dinda, Nikos Hardavellas, Simone Campanoni, Umut Acar (CMU), Michael Rainey (CMU), Kyle C. Hale (IIT), 2020–2022

NSF CNS-1763743.

CSR: Medium: Collaborative Research: Interweaving the Parallel Software/Hardware Stack.

Peter A. Dinda, Simone Campanoni, Nikos Hardavellas, Kyle C. Hale (IIT), 2018–2022

NSF CCF-1453853.

CAREER: Energy-Efficient and Energy-Proportional Silicon-Photonic Manycore Architectures.

Nikos Hardavellas, 2015–2021

NSF CCF-1218768.

SHF: Small: Collabroative Research: Elastic Fidelity: Trading-off Computational Accuracy for Energy Efficiency.

Nikos Hardavellas, Seda Ogrenci-Memik, Srinivasan Parthasarathy (OSU), 2012–2015

Argonne National Laboratory subcontract.

Exploring Machine Learning-based Approaches to Auto-tuning Distributed Memory Communication.

Peter A. Dinda and Nikos Hardavellas, 2021–2023

Academic Year Undergraduate Research Grant.,

Northwestern University.

A Compiler for Chiplet-Based Quantum Systems.

Nino Maruszewski (adv. Nikos Hardavellas), 2023

McCormick Summer Undergraduate Research Award.

A Compiler for Chiplet-Based Quantum Systems.

Nino Maruszewski (adv. Nikos Hardavellas), 2023

McCormick Equipment Fund and

Department of Computer Science.

A Heterogeneous Hardware Testbed for Systems and Security Research.

Nikos Hardavellas, Peter Dinda, Simone Campanoni, and Yan Chen, 2022

AMD Vivado ML Enterprise Edition Multi-seat License Donation, 2023–2024

ISEN, Booster Award.

Toward Energy-Efficient Computing on Dark Silicon.

Nikos Hardavellas, 2013–2014

Intel Parallel Computing Center.

Nikos Hardavellas, Vadim Linetsky, Diego Klabjan, Jeremy C. Staum, 2014

Allinea Performance Analysis Software License Donation, 2015–2016

Synopsys, Semiconductor IP License Donation, 2010–2015

Cadence, Tensilica XTensa Processor Generator Software License Donation, 2013–2015

Mentor Graphics, FloTHERM/Icepack Software License Donation, 2012–2015

Windriver, Simics Software License Donation, 2009–2015

Team

Raphael's School of Athens, depicting Leonardo da Vinci as Plato, Michelangelo as Heraclitus,

Bramante as Euclid, Aristotle, Pythagoras, Archimedes, Socrates, Anaximander, Parmenides, Diogenes, Ptolemy,

and Democritus, among others.

Raphael's School of Athens, depicting Leonardo da Vinci as Plato, Michelangelo as Heraclitus,

Bramante as Euclid, Aristotle, Pythagoras, Archimedes, Socrates, Anaximander, Parmenides, Diogenes, Ptolemy,

and Democritus, among others.

Faculty

Nikos Hardavellas, Professor, CS & ECE

Ph.D. Students

Jessica Jeng

Mu-Te Lau

Atmn Patel

Yuchen Zhu

M.S. Students

Undergraduate Students

Josh Karpel, Computer Engineering

Alumni (Ph.D.)

Michael Wilkins (co-advised with Peter Dinda)

Ph.D. March 2024. On Transparent Optimizations for Communication in Highly Parallel Systems.

First employment: Cornelis Networks

Vijay Kandiah

Ph.D. November 2023. Uncovering Latent Hardware/Software Parallelism.

First employment: Nvidia

Haiyang (Drake) Han

Ph.D. May 2022. High-performance and Energy-efficient Computing Systems Using Photonics.

First employment: Apple

Ali Murat Gok